The rise of AI and its consequences, as seen from an instructional perspective

We've all seen the remarkable things that AI can do with photos and videos. Once I return to teaching college students this fall, I'm confident I'll also discover what AI can do to "help" them write research papers and the like.

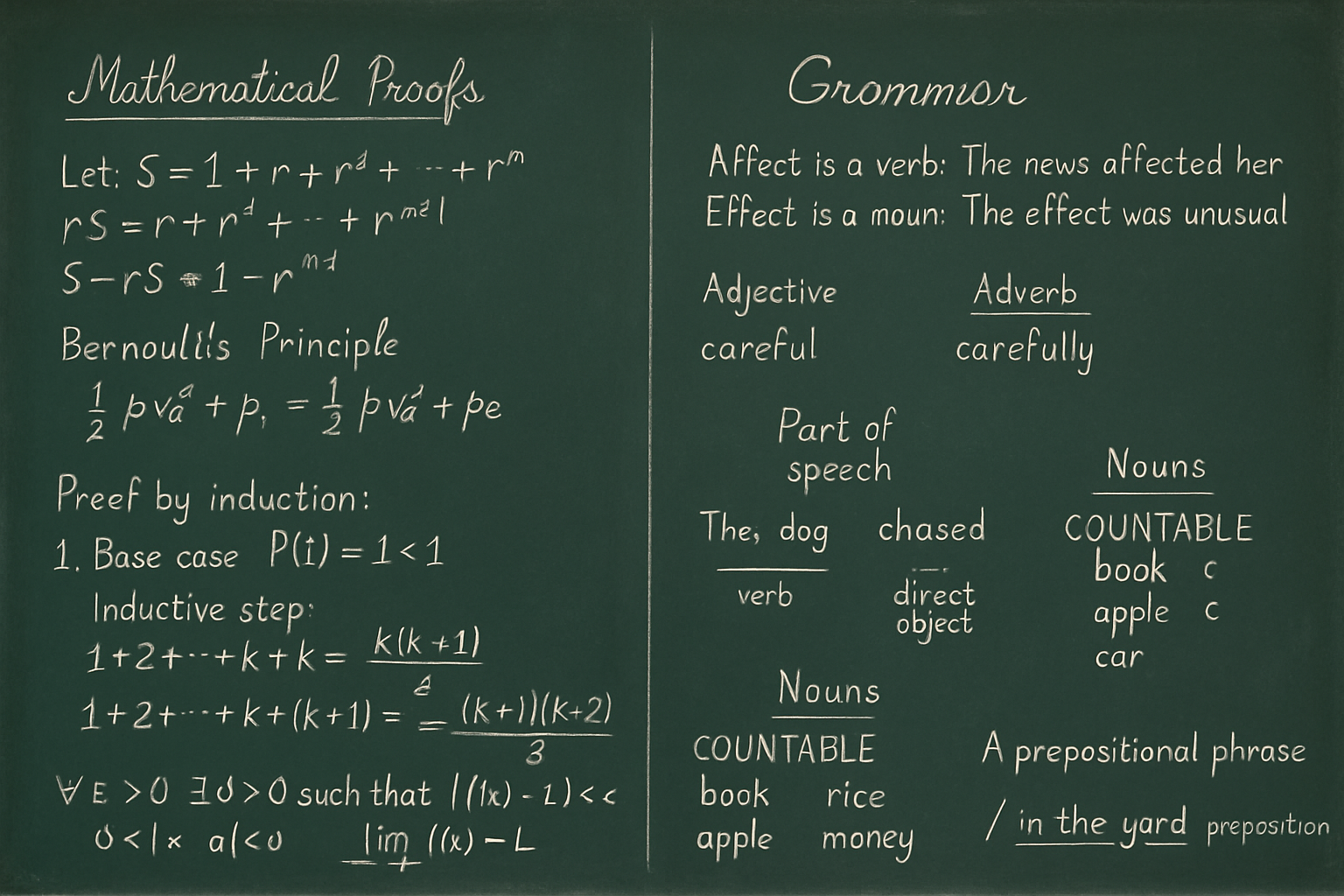

Witness the above image, which was generated using ChatGPT. The prompt was "Generate an image of a classroom chalkboard with both complex mathematical proofs and grammar rules neatly handwritten in chalk." Notice the incorrect spellings and errors in the math. AI simply makes things up.

During a work trip to Iceland earlier this year for an aviation blog I write for regularly, I had the opportunity to interview the entire C-suite at an Icelandic airline's headquarters. It seemed like a great opportunity to try one of the many AI note-transcription apps available for my iPhone. So I found one with a solid rating, tested it, and then paid for a one-month subscription.

You're probably guessing that you're going to read about a moral to this tale, and you're correct.

The meeting included close to 10 people from the airline (the CEO, CFO, strategy officer, and others), along with a half-dozen journalists from around the world. There were plenty of heavily accented English speakers. The app did remarkably well at transcribing their comments. Where it failed was in consistently identifying who was speaking.

And guess who saw how good the transcription looked and got lazy about his written notes? Yep. Yours truly.

The notes wound up being a mess of good quotes without proper attribution. I managed to sort it all out using my meager written notes and my recollection of who said what, because I started working on the piece straight away, while the meeting was still fresh in my mind.

But relying on AI nearly led to disaster. Lesson learned.

Another lesson was that AI is making us less capable, and using it has demonstrable negative effects on both cognition and critical thinking skills.

As an aside, AI also has a deleterious effect on the environment. The data centers that house the vast banks of hardware needed to generate that eight-armed octo-cat your kid dreamed up for their social media feed require lots of physical space and an alarming amount of electricity.

Back to the main point, though: these AI systems aren't actually intelligent; that's simply marketing. They're cleverly designed algorithms trained on vast troves of pilfered material (books, magazines, blogs, websites of all kinds, photos, videos, basically everything available online), and they essentially guess at what you've requested.

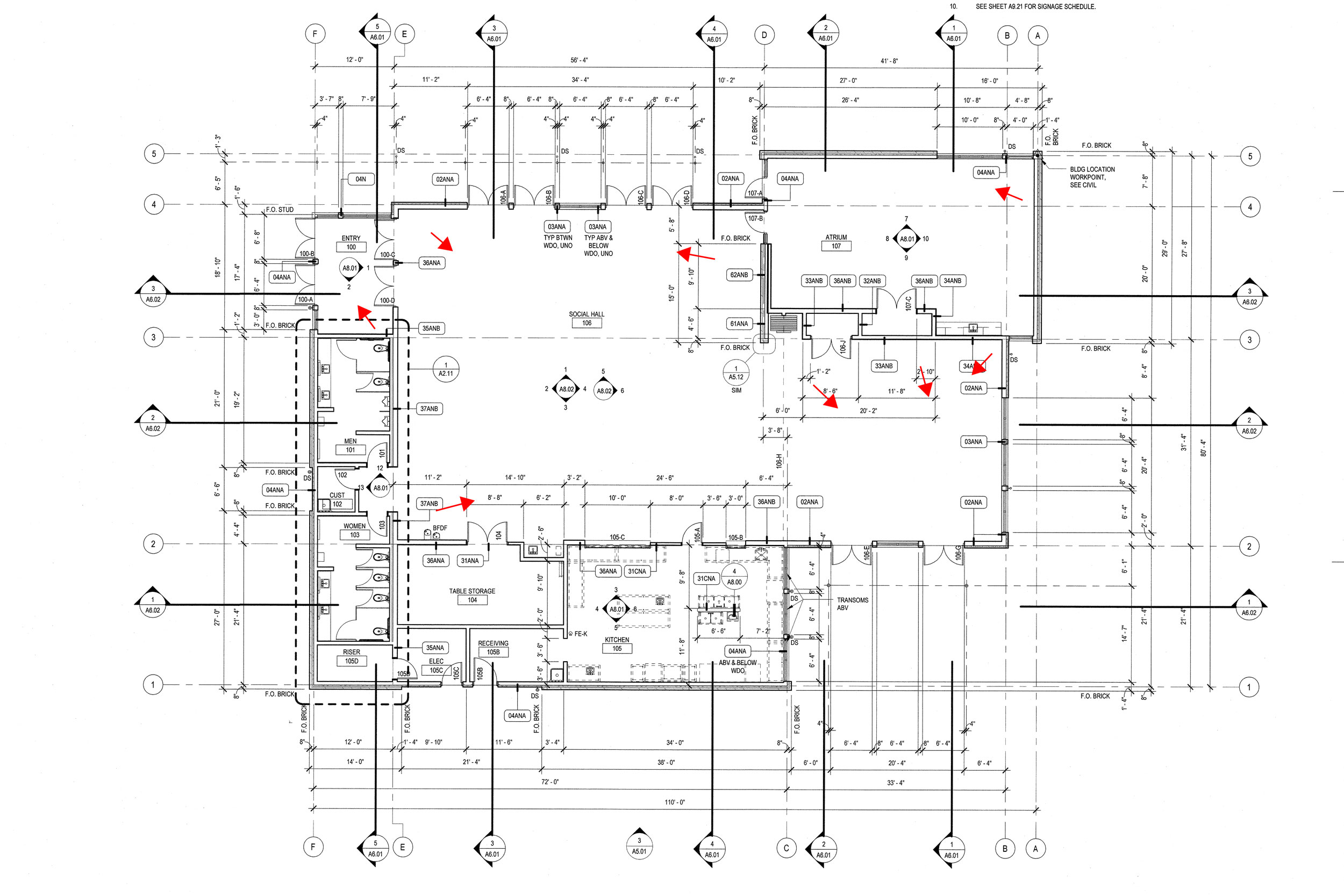

With a modern-day Library of Alexandria to draw from, it does a remarkable job with writing. It often falls apart with images, though. I struggle to get it to generate realistic-looking people to replace the folks who appear in my architectural photos and for whom we are unable to obtain model releases.

What it doesn't handle well is accuracy. Google has added an AI summary to the top of its search results. Besides reducing traffic to websites by summarizing their content, it also frequently just makes stuff up.

For instance, I was recently looking for an obscure setting on one of my camera bodies, so I turned to the formerly trustworthy Google for an answer. The AI invented a menu path and settings structure that didn't actually exist. It didn't even cite a source, because, again, it had simply made up the answer. The correct answer was further down the page, in one of the links to the camera manufacturer's site that the search returned. But people apparently don't bother to look at those anymore, blindly trusting whatever the AI spits out.

I don't mean to be all doom and gloom, but I fear that our already diminished critical thinking skills are being compromised even further by this "miraculous technology."

So, in my class this fall, we will do all writing exercises during class time. That's an easy way to prevent cheating, but it also consumes class time that would be better spent on instruction. In the not-so-distant past, written exercises were best done outside of class.

What does this mean overall? I’ve recently read several articles and studies that point to a decline in overall cognition, problem-solving skills, and basic logic skills among regular users of AI tools, especially those who use them to generate written communication or to solve math problems. It’s an unsurprising conclusion: the human intellect is a use-it-or-lose-it proposition, as anyone who has learned a foreign language and then lost it through lack of practice can attest.

The situation does not fill me with optimism. People have known for generations that regular exercise and good dietary habits lead to a longer, healthier life, yet few actually follow that advice. I expect this will be little different.

Sorry for the downer post; I just needed to get it out of my system.